The challenge with traditional document management is the friction of information retrieval. Searching for a specific insight within a 500-page document is inefficient. OpsVerseAI was built to solve this by providing an interface where users can query their documents using natural language.

This article explores the underlying architecture of OpsVerseAI and how it implements the RAG (Retrieval-Augmented Generation) pattern.

The RAG Pipeline: How It Works

Retrieval-Augmented Generation bridges the gap between an LLM's static training knowledge and private or recently updated data the model has never seen. The process in OpsVerseAI follows three primary stages:

1. Ingestion and Embedding

When a user uploads a PDF, the system extracts the raw text and breaks it into smaller, overlapping chunks. These chunks are then converted into high-dimensional numerical vectors (embeddings) using an embedding model such as Google's text-embedding-004.
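To make the overlap concrete, here is a minimal TypeScript sketch of fixed-size, overlapping chunking. The function name `chunkText` and its character-based sizing are assumptions for illustration; production splitters usually prefer to break on paragraph or sentence boundaries.

```typescript
// Hypothetical helper: split text into fixed-size character windows that overlap,
// so text cut at one boundary still appears intact in the neighboring chunk.
function chunkText(text: string, chunkSize: number, overlap: number): string[] {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  if (overlap < 0 || overlap >= chunkSize) {
    throw new Error("overlap must be in [0, chunkSize)");
  }
  const step = chunkSize - overlap; // how far the window advances each time
  const chunks: string[] = [];
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once the current window already reaches the end of the text
    if (start + chunkSize >= text.length) break;
  }
  return chunks;
}
```

For example, `chunkText("abcdefghij", 4, 2)` yields `["abcd", "cdef", "efgh", "ghij"]`: each window starts two characters after the previous one, so consecutive chunks share two characters.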

2. Vector Storage (Pinecone)

These embeddings are stored in Pinecone, a specialized vector database. Unlike traditional SQL databases, Pinecone is optimized for similarity searches, allowing the system to find the most relevant "chunks" of text for a given query in milliseconds.
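Conceptually, a similarity search scores stored vectors against the query vector and returns the best matches. The brute-force sketch below assumes cosine similarity as the metric; the names `StoredChunk`, `cosineSimilarity`, and `topK` are hypothetical. Pinecone itself relies on approximate nearest-neighbor indexes rather than scanning every vector, which is what keeps queries fast at scale.

```typescript
// Illustrative stand-in for a vector-database query: score every stored vector
// against the query by cosine similarity and keep the k highest-scoring ones.
interface StoredChunk {
  id: string;
  values: number[];
}

function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("dimension mismatch");
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero for all-zero vectors
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

function topK(query: number[], chunks: StoredChunk[], k: number): { id: string; score: number }[] {
  return chunks
    .map((c) => ({ id: c.id, score: cosineSimilarity(query, c.values) }))
    .sort((x, y) => y.score - x.score) // highest similarity first
    .slice(0, k);
}
```

A linear scan like this is fine for a few thousand vectors, but its cost grows with the size of the collection, which is the problem a dedicated vector database addresses.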

3. Retrieval and Generation

When a user asks a question, the query itself is embedded. The system then retrieves the top matching chunks from Pinecone. These chunks are provided to the LLM (Gemini) as "context", so the generated answer is grounded in the document rather than only in the model's general training data.
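Providing chunks "as context" amounts to assembling them into the prompt alongside the question. A minimal sketch follows; the function name `buildPrompt` and the instruction wording are assumptions, not OpsVerseAI's actual prompt.

```typescript
// Hypothetical prompt template: number each retrieved chunk and instruct the
// model to answer only from that context.
function buildPrompt(question: string, contextChunks: string[]): string {
  const context = contextChunks
    .map((chunk, i) => `[${i + 1}] ${chunk}`)
    .join("\n\n");
  return [
    "Answer the question using only the context below.",
    "If the context does not contain the answer, say that you don't know.",
    "",
    "Context:",
    context,
    "",
    `Question: ${question}`,
  ].join("\n");
}
```

Numbering the chunks gives the model a stable way to refer to individual passages, and telling it to admit when the context lacks an answer discourages it from filling gaps with unsupported claims.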

Technical Stack

- Framework: Next.js (App Router), serving both the frontend and the serverless API routes.

- Intelligence: Google Gemini for answer generation; its large context window leaves room for many retrieved chunks.

- Vector Database: Pinecone for efficient semantic retrieval.

- Authentication: Clerk for secure, multi-tenant user management.

- Payments: Stripe for subscription-based feature gating.
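Subscription-based gating ultimately reduces to a check against the user's current plan state. The sketch below assumes the app stores the latest Stripe subscription status per user; the `hasPremiumAccess` name and the choice to grant access only for `active` and `trialing` are hypothetical policy decisions, not details from OpsVerseAI.

```typescript
// Subscription statuses Stripe can report on a Subscription object.
type SubscriptionStatus =
  | "active" | "trialing" | "past_due" | "canceled"
  | "unpaid" | "incomplete" | "incomplete_expired" | "paused";

// Hypothetical policy: only these statuses unlock premium features.
const PREMIUM_STATUSES: ReadonlySet<SubscriptionStatus> = new Set<SubscriptionStatus>([
  "active",
  "trialing",
]);

function hasPremiumAccess(status: SubscriptionStatus | null): boolean {
  // null means the user has never subscribed
  return status !== null && PREMIUM_STATUSES.has(status);
}
```

Some apps also keep access during `past_due` to give customers a grace period; that is a business decision rather than a technical requirement.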

Key Implementation Details

Handling Large PDFs

One of the primary difficulties in PDF chat apps is maintaining context across large files. OpsVerseAI uses a sliding window chunking strategy:

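```ts
import { RecursiveCharacterTextSplitter } from "@langchain/textsplitters"; // assumed splitter library
const textSplitter = new RecursiveCharacterTextSplitter({ chunkSize: 1000, chunkOverlap: 200 }); // illustrative sizes
const chunks = await textSplitter.splitText(pdfContent); // pdfContent: raw text extracted from the PDF
// Neighboring chunks share up to chunkOverlap characters for semantic continuity; metadata is attached per chunk before storage
```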
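Before upserting, each chunk can be paired with its embedding and metadata. This sketch assumes a record shape of id, vector values, and a flat metadata object, which matches what Pinecone stores per vector; the `toRecords` helper, the `documentId#index` id scheme, and the metadata fields are illustrative assumptions.

```typescript
// Hypothetical record layout: one vector per chunk, with metadata that lets a
// search hit be traced back to its document and position.
interface ChunkRecord {
  id: string;
  values: number[];
  metadata: { documentId: string; chunkIndex: number; text: string };
}

function toRecords(documentId: string, chunks: string[], embeddings: number[][]): ChunkRecord[] {
  if (chunks.length !== embeddings.length) {
    throw new Error("each chunk needs exactly one embedding");
  }
  return chunks.map((text, chunkIndex) => ({
    id: `${documentId}#${chunkIndex}`,
    values: embeddings[chunkIndex],
    // Keeping the raw text in metadata lets matched chunks go straight to the LLM
    // without a second lookup in another database.
    metadata: { documentId, chunkIndex, text },
  }));
}
```

The resulting array would then be passed to the Pinecone client's upsert call, optionally scoped to a per-user namespace so tenants' vectors stay separate.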

Secure Webhooks with Stripe

To manage premium access, OpsVerseAI uses Stripe webhooks to listen for successful checkouts and update user permissions in real time.

Security Tip: Always verify the Stripe signature to prevent spoofed webhook events from granting unauthorized access.
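Stripe signs each webhook by computing an HMAC-SHA256 over `<timestamp>.<raw body>` with the endpoint's signing secret and sending the result in the `Stripe-Signature` header. In practice the official SDK's `stripe.webhooks.constructEvent(rawBody, signatureHeader, endpointSecret)` performs this check; the hand-written `verifyStripeSignature` below is a hypothetical sketch that only illustrates what the check involves. The 300-second tolerance mirrors the SDK's default.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Illustration only: prefer stripe.webhooks.constructEvent in production code.
function verifyStripeSignature(
  rawBody: string,
  signatureHeader: string,
  endpointSecret: string,
  toleranceSeconds = 300,
  nowSeconds = Math.floor(Date.now() / 1000),
): boolean {
  // Header format: "t=<unix timestamp>,v1=<hex signature>[,v1=...]"
  let timestamp: string | undefined;
  const signatures: string[] = [];
  for (const part of signatureHeader.split(",")) {
    const [key, value] = part.split("=", 2);
    if (key === "t") timestamp = value;
    else if (key === "v1" && value) signatures.push(value);
  }
  const ts = Number(timestamp);
  if (!timestamp || !Number.isFinite(ts) || signatures.length === 0) return false;

  // Reject events outside the tolerance window to limit replay attacks
  if (Math.abs(nowSeconds - ts) > toleranceSeconds) return false;

  // Recompute the signature over "<timestamp>.<raw body>" using the endpoint secret
  const expected = createHmac("sha256", endpointSecret)
    .update(`${timestamp}.${rawBody}`, "utf8")
    .digest();

  return signatures.some((sig) => {
    const received = Buffer.from(sig, "hex");
    // timingSafeEqual throws on length mismatch, so compare lengths first
    return received.length === expected.length && timingSafeEqual(received, expected);
  });
}
```

In a Next.js App Router route handler, read the body with `await req.text()` rather than `req.json()`: the signature covers the exact raw payload, and re-serialized JSON will generally not match it.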

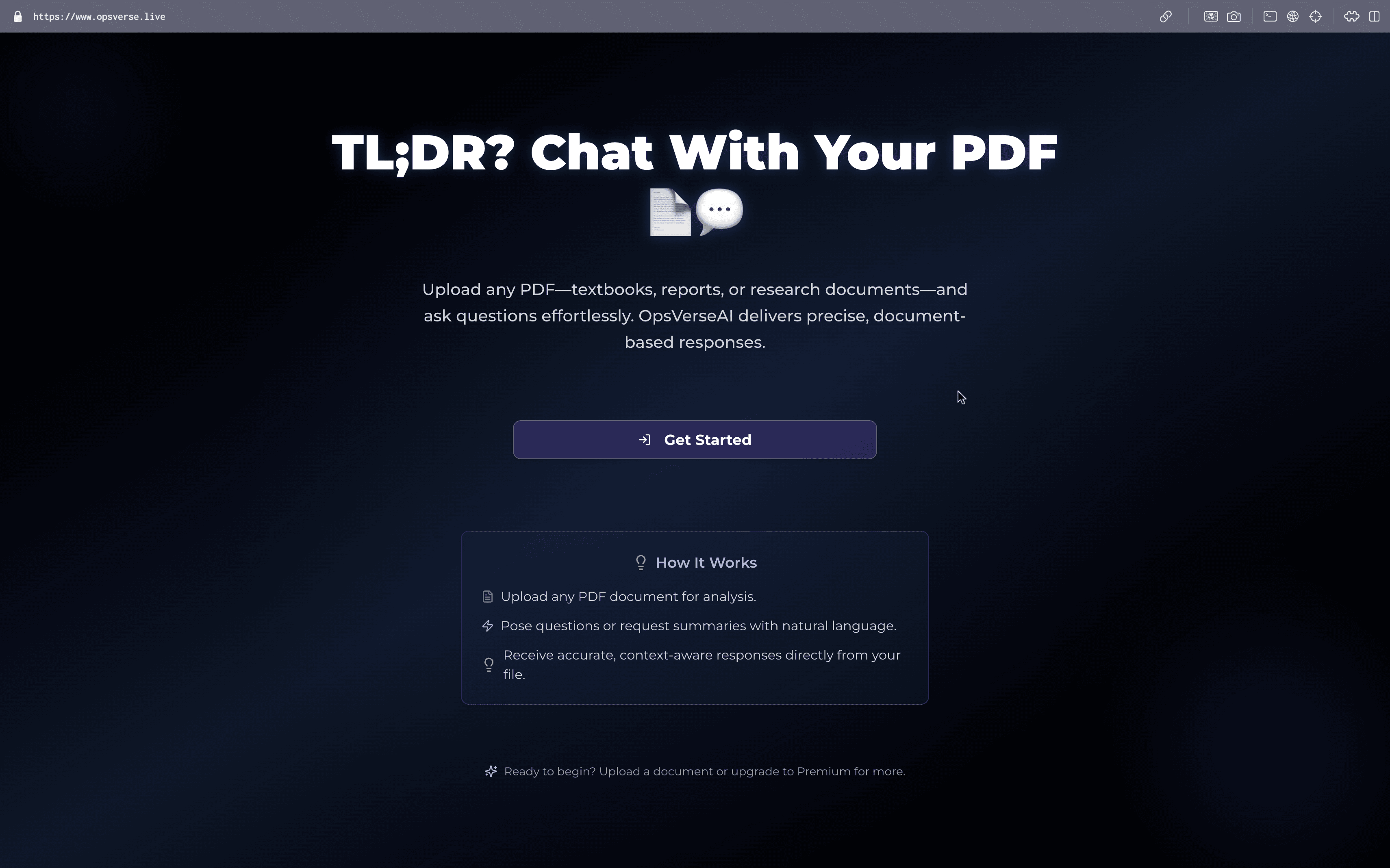

The User Interface

The design of OpsVerseAI prioritizes readability and ease of use. The chat interface is built with Framer Motion for smooth transitions, mirroring the experience of modern AI platforms like ChatGPT.

OpsVerseAI represents the shift from document storage to document intelligence. It is no longer about where your files live, but how easily they can be queried.

Future Roadmap

- Multi-Document Reasoning: The ability to query an entire library of PDFs simultaneously.

- Support for More Formats: Expanding beyond PDF to Markdown, DOCX, and Notion exports.

- Source Citations: Precise page and paragraph references for every AI response to ensure transparency.